As a recovered academic, I feel comfortable writing some stories I have not shared before.

From 2018 to 2022, I introduced myself as a recovering academic. Only now, in 2023, am I comfortable saying I am a recovered academic. Having recovered, I am in a comfortable place to start sharing some personal stories that have not seen the light of day in written form before.

Writing stories is a way for me to remember and to reflect on some of the events that shaped me. This series will become my external memory archive as I wade through my personal archives of recordings, emails, and internal memories. I do not yet know the lineup of these posts.

Despite my pseudonymization, it will not be hard to figure out who is who in these stories, especially if you are familiar with the actors. But that is not the point. Beyond serving as my external memory, I hope these stories help others who are living through similar ones feel seen. I love the idea of trauma-informed design, where we use our trauma to inform how we imagine better futures. Maybe revisiting some of the things I needed to recover from so intensely will prove informative to me as well.

I recognize I am a male-presenting white person and a first-generation student. I pass as the default, even though at times, in minimal ways, I may not be. In no way do I write these stories to elevate them as the worst things in the world. If anything, I hope they may inspire others to feel the courage to share their stories, no matter how old those stories are.

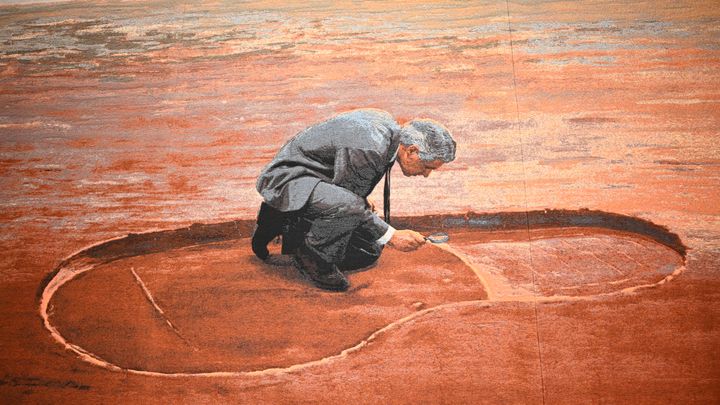

Symbolically, I will open this series of posts with my dissertation's prologue (minus the chapter summary), to set the stage once more.

The history and practice of science are convoluted[1], but when I was a student they were taught to me in a relatively uncomplicated manner. Among the things I remember from my high school science classes are how to convert a metric distance into Astronomical Units (AUs) and that there was something called the empirical cycle. Those classes presented things such as the AU and the empirical cycle as unambiguous truths. In hindsight, it is difficult to imagine these constructed ideas as historically unambiguous. For example, I was taught the AU as simple arithmetic, while that calculation implies accepting the outcome of a historically complex process full of debate on how an AU should be defined[2]. As such, that calculation was path-dependent, similar to how the history and practice of science in general are also path-dependent [3][4].

Scientific textbooks understandably present a distillation of the scientific process. Not everyone needs the (full) history of discussions after broad consensus has already been reached. This is a useful heuristic for progress but also minimizes (maybe even belittles) the importance of the process[3]. As such, textbook science (vademecum science)[5], with which science teaching starts, offers high certainty and little detail, and provides the breeding ground for a view of science as producing certain knowledge. Through this kind of teaching, storybook images of scientists and science might arise, often as the actors and process of discovering absolute truths rather than of the uncertain and iterative production of coherent and consistent knowledge. Such storybook images likely result in the impression that scientists are more objective, rational, skeptical, rigorous, and ethical than non-scientists, even after taking into account educational level [6].

Scientific research articles tend to provide more details and less certainty than scientific textbooks, but still present storified findings that simplify a complicated process into a single, linear narrative[7]. Compared to scientific textbooks, which present a narrative across many studies, scientific articles provide a narrative across relatively few studies. Hence, scientific articles should be relatively better than scientific textbooks for understanding the validity of findings because they have more space to add nuance, provide details, and contextualize research findings. Nonetheless, the linear narrative of the scientific article distills and distorts a complicated non-linear research process and thereby provides little space to encapsulate the full nuance, detail, and context of findings. Moreover, storification of research results requires flexibility. When that flexibility shows up in the analyses, it may be one of the main culprits of false positive findings (i.e., incorrectly claiming an effect)[8], and it detracts from accurate reporting. The lack of detail and (excessive) storification go hand in hand with the misrepresentation of event chronology to present a more comprehensible narrative to the reader and researcher. For example, breaks from a main narrative (i.e., nonconfirming results) may be excluded from the reporting. Such misrepresentation becomes particularly problematic if the validity of the presented findings rests on the actual and complete order of events, as it does in the prevalent epistemological model based on the empirical research cycle [9]. Moreover, the storification within scholarly articles can create highly discordant stories across scholarly articles, leading to conflicting narratives and confusion in research fields or news reports and, ultimately, a less coherent understanding of science by both general and specialized audiences.

When I started as a psychology student in 2009, I implicitly perceived science and scientists in the storybook way. I was the first in my immediate family to go to university, so I had no previous informal education about what 'true' scientists or 'true' science looked like; I was influenced only by the depictions in the media and popular culture. In other words, I thought scientists were objective, disinterested, skeptical, rigorous, ethical (and predominantly men). The textbook- and article-based education I received at the university did not disconfirm or recalibrate this storybook image and, in hindsight, might have served to reinforce it. For example, textbooks provided a decontextualized history that presented the path of discovery as linear and 'the truth' as unequivocal, multiple-choice exams allowed only correct or wrong answers, and peer-reviewed publications offered certified stories. Granted, the storybook qualities were attributed to the empirical scientist exactly because the empirical research cycle provided a way to overcome human biases and provided grounds for the widespread belief that the search for 'the truth' was more important than individual gain.

As I progressed through my science education, a series of events made clear to me how naive the storybook image of science and the scientist was, because these events undercut the very epistemological model that granted these qualities. As a result, I had what I somewhat dramatically called two 'personal crises of epistemological faith in science' (or put plainly: wake-up calls). These crises strongly correlated with several major events within the psychology research community and raised doubts about the value of the research I was studying and conducting. Both crises made me consider leaving scientific research, and I am sure I was not alone in experiencing this sentiment.

My first, local crisis of epistemological faith came when the psychology professor who got me interested in research publicly confessed to having fabricated data throughout his academic career [10]. Having been inspired to go down the path of scholarly research by this very professor and having worked as a research assistant for him, I doubted myself and my abilities. I asked myself whether I was critical enough to conduct and recognize valid research. After all, I had not had even an inkling of suspicion while working with him. Moreover, I wondered what to make of my interest in research, given that the person who inspired me appeared to be such a bad example to model myself after. This event also unveiled to me the politics of science and how validity, rigor, and 'truth' finding were not a given [11]. Regardless, the self-reported prevalence of fraudulent behaviors among scientists (viz. 2%)[12] was sufficiently low to not undermine the epistemological effort of the scientific collective (although it could still severely distort it). Ultimately, I considered it unlikely that the majority of researchers would be fraudsters like this professor and simply realized that research could fail at various stages (e.g., data sharing, peer review). As a result, I became more skeptical of the certified stories in peer-reviewed journals and of my own and others' research. I ultimately shifted my focus towards studying statistics to improve research.

A second, more encompassing epistemological crisis arose when I took a class that indicated that scientists undermine the empirical research cycle at a large scale. These behaviors were sometimes intentional, sometimes unintentional, but often the result of misconceptions and flawed procedures adopted in order to play the game of getting published[13]. More specifically, this epistemological crisis originated from learning about how loose application of statistical procedures could produce statistically significant results from pretty much anything[14]. Additionally, these behaviors result in biased publication of results[15] through the invisible (and often unaccountable) hand of peer review[16], which in itself suffers from various misconceptions. This combination potentially leads to a vicious cycle of overestimated (and sometimes false positive) effects, leading to underpowered research that is selectively published, leading to overestimated effects and underpowered research, and so on until that cycle gets disrupted. These issues are not necessarily new and have been discussed for over 40 years in some shape or form[17]. Given this longstanding vicious cycle, it seemed unlikely the issues in empirical research would resolve themselves; they seemed more likely to be further exacerbated if left unattended. Progress on these issues would not be trivial or self-evident, given that previous awareness subsided and attempts to improve the situation did not stick in the long run. It also indicated to me that the reforms needed had to be substantial, because the improvements made over the last decades remained insufficient (although the historical context is highly relevant)[18]. Because of the failed attempts in the past and the awareness of these issues throughout the last six years or so, my epistemological worries are ongoing and oscillate between pessimism and optimism for improvement.
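
The selective-publication half of this cycle can be sketched in a few lines of Python. This is an illustration only, not an analysis from the literature cited here: the true effect (0.2), the group size (20), the number of studies, the z > 1.96 publication rule, and all function names are assumptions chosen for the example. The sketch simulates many small two-group studies of the same true effect and "publishes" only the statistically significant ones.

```python
import random
import statistics


def simulate_study(true_effect, n_per_group, rng):
    """Return (observed mean difference, z statistic) for one two-group study."""
    treatment = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    diff = statistics.fmean(treatment) - statistics.fmean(control)
    # Assumption: both groups have known unit variance, so SE = sqrt(2 / n).
    standard_error = (2.0 / n_per_group) ** 0.5
    return diff, diff / standard_error


def run_literature(true_effect=0.2, n_per_group=20, n_studies=5000, seed=1):
    """Simulate a body of studies and keep only the 'significant' ones."""
    rng = random.Random(seed)
    studies = [simulate_study(true_effect, n_per_group, rng)
               for _ in range(n_studies)]
    all_effects = [diff for diff, _ in studies]
    # Hypothetical publication rule: only z > 1.96 gets into print.
    published = [diff for diff, z in studies if z > 1.96]
    return {
        "true_effect": true_effect,
        "mean_all": statistics.fmean(all_effects),
        "mean_published": statistics.fmean(published),
        "share_published": len(published) / n_studies,
    }


result = run_literature()
print(result)
```

Under these assumptions, only a minority of studies clears the significance filter, and the average effect among them is necessarily larger than the true effect: with 20 participants per group, a study can only reach z > 1.96 with an observed difference of about 0.62 or more. A researcher who plans the next sample size around that inflated published estimate ends up underpowered again, which is what keeps the cycle going.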

Nonetheless, these two epistemological crises caused me to become increasingly engaged with various initiatives and research domains to actively contribute towards improving science. This was not only my personal way of coping with these crises and more specific incidents; it also felt like an exciting space to contribute to. In late 2012, I was introduced to the concept of Open Science for my first big research project. It seemed evident to me that Open Science was a great way to improve the verifiability of research[19]. The Open Science Framework had launched only recently [20], which is where I started to document my work openly. I found it scary and difficult, and I did not know where to start, simply because I had never been taught to do science this way nor did anyone really know how. It led me to experiment with these new tools and processes to find out the practicalities of actually making my own work open, and I have continued to do so ever since. It made me work in a more reproducible, open manner, and also led me to become engaged in what are often called the Open Access and Open Science movements. Both these movements aim to make knowledge available to all in various ways, going beyond dumping excessive amounts of information to also making it comprehensible, for example by providing clear documentation for data. Not only are the communities behind these movements supportive in educating each other in open practices, they also activated me to help others see the value of Open Science and how to implement it [21]. Through this, activism within the realm of science became part of my daily scientific practice.

Actively improving science through doing research became the main motivation for me to pursue a PhD project. Initially, we set out to focus purely on statistical detection of data fabrication (linking back to my first epistemological crisis). The proposed methods to detect data fabrication had been neither widely tested nor validated, and there was a clear opportunity for a valuable contribution. Rather quickly, our attention widened towards a broader set of issues, resulting in a broad perspective on issues in science by looking not only at data fabrication, but also at questionable research practices, statistical results and the reporting thereof, complemented by thinking about incentivizing rigorous practices.

Wootton, David. 2015. The Invention of Science: A New History of the Scientific Revolution. Toronto, Canada: Allen Lane. ISBN:9781846142109.

Latour, Bruno, and Steve Woolgar. 1986. Laboratory Life: The Construction of Scientific Facts. Princeton, NJ: Princeton University Press. ISBN:0692094187.

Gelman, Andrew, and Eric Loken. 2013. “The Garden of Forking Paths: Why Multiple Comparisons Can Be a Problem, Even When There Is No ‘Fishing Expedition’ or ‘P-Hacking’ and the Research Hypothesis Was Posited Ahead of Time.” https://wayback.archive.org/web/20180712075516/http://www.stat.columbia.edu/~gelman/research/unpublished/p_hacking.pdf.

Fleck, Ludwig. 1984. Genesis and Development of a Scientific Fact. Chicago, IL: University of Chicago Press. ISBN:9780226253251.

De Groot, A.D. 1994. Methodologie: Grondslagen van Onderzoek En Denken in de Gedragswetenschappen [Methodology: Foundations of Research and Thinking in the Behavioral Sciences]. Assen, the Netherlands: Van Gorcum. ISBN:9789023228912.

Stapel, Diederik A. 2012. Ontsporing. Amsterdam, the Netherlands: Prometheus. ISBN:9789044623123.

See for example Broad, William, and Nicholas Wade. 1983. Betrayers of the Truth. New York, NY: Simon & Schuster. ISBN:9780671447694.

Harnad, Stevan. 2000. "The Invisible Hand of Peer Review." Exploit Interactive. http://cogprints.org/1646/.

doi:10.1037/0033-2909.105.2.309; doi:10.1037/h0045186; doi:10.1037/0033-2909.86.3.638; doi:10.2466/03.11.pms.112.2.331-348; doi:10.1207/s15327957pspr0203_4; doi:10.1056/nejm199310143291613

See also doi:10.15200/winn.144232.26366

My first steps taken in doi:10.6084/m9.figshare.928315.v2